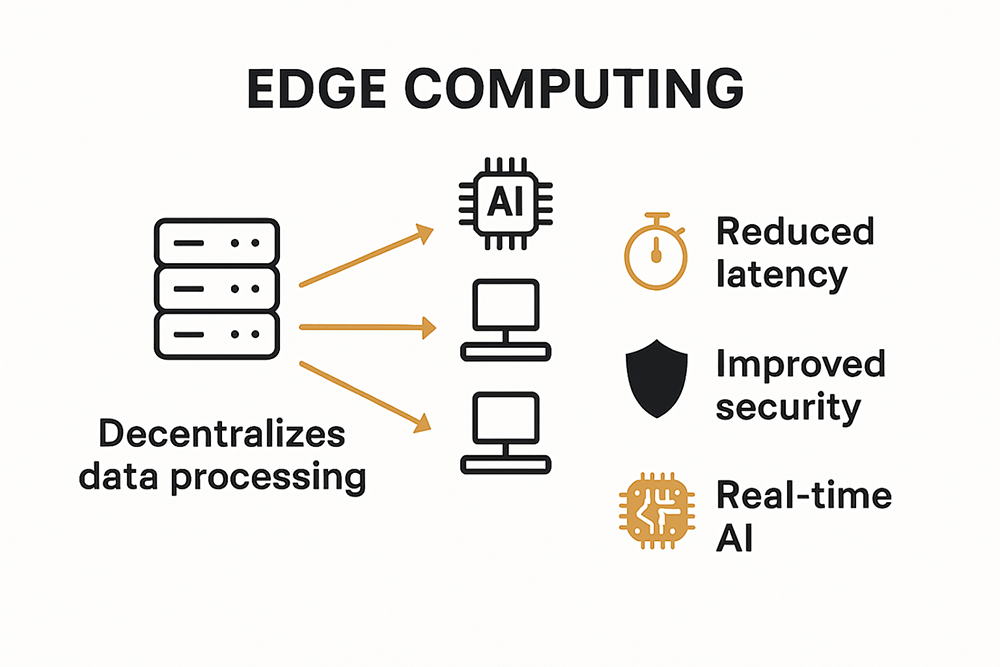

Edge computing is pushing AI and high-performance computing to the next level. By 2025, experts predict the market for edge computing will surge past $100 billion worldwide, completely changing how and where data gets processed. Most people think speed is the big story here. Turns out, decentralization and tighter security might be even more disruptive, making tomorrow’s data centers unrecognizable from today’s.

| Takeaway | Explanation |
|---|---|
| Edge Computing Will Decentralize Data Processing | The shift from centralized to distributed architecture enhances responsiveness by processing data closer to its source. |
| Energy Efficiency is Crucial for Future Hardware | New hardware designs focus on maximizing performance while minimizing energy consumption through advanced features like AI-optimized processors. |
| Security Must Adapt to Decentralized Systems | As workloads become distributed, enterprises need to implement stronger security measures and granular access controls to protect data effectively. |
| Integration Challenges Will Need Comprehensive Solutions | Organizations must address interoperability and model optimization to successfully adopt edge computing and improve performance consistency. |
| Adopting Strategic Infrastructure Planning is Key | Tailored strategies for resource allocation and scalability are essential to leverage edge computing effectively and meet departmental needs. |

The future of edge computing represents a critical transformation in how artificial intelligence and high-performance computing (HPC) systems process and analyze data. As computational demands continue to escalate, edge computing emerges as a pivotal technology that decentralizes computing power and brings intelligent processing closer to data sources.

Traditional centralized computing models are rapidly giving way to more distributed architectures, and edge computing is fundamentally redesigning how computational workloads are managed. The European Commission’s Horizon Europe program highlights a strategic approach to integrating computing technologies across IoT devices, edge computing, cloud, and HPC platforms.

The evolution centers on creating more responsive and efficient computing ecosystems. According to Arm’s technology insights, the key focus is developing energy-efficient solutions that can support intelligent applications across robotics, AI, and complex computational domains. This shift means moving beyond traditional data center models to more flexible, distributed computing architectures that can process data closer to its origin.

Edge computing for AI and HPC faces significant technical challenges. The primary objective is to balance computational power with energy efficiency and low-latency processing. The UK Compute Roadmap demonstrates a substantial investment strategy, allocating approximately 3 billion to develop advanced computing infrastructure that supports next-generation AI and HPC requirements.

The computational landscape is rapidly transforming, with edge computing positioned to revolutionize how AI and HPC systems interact with data. By bringing computational intelligence closer to the source, organizations can achieve unprecedented levels of responsiveness, efficiency, and insights across various domains ranging from scientific research to industrial automation.

As we approach 2025, the convergence of AI, HPC, and edge computing will continue to redefine technological capabilities, offering more adaptive, intelligent, and decentralized computational frameworks that can respond to increasingly complex computational challenges.

The hardware landscape for edge computing in 2025 is undergoing a transformative revolution, driven by the increasing demand for intelligent, energy-efficient, and high-performance computing solutions. As artificial intelligence and high-performance computing continue to push technological boundaries, specialized hardware emerges as the critical enabler for next-generation edge computing capabilities.

Edge computing hardware is rapidly evolving to meet the complex computational requirements of AI and HPC applications, and specialized processing units play a critical role. According to IEEE’s comprehensive hardware review, the key hardware trends for 2025 include:

| Hardware Type | Key Benefit | Example Application |
|---|---|---|
| AI-Optimized GPUs | High energy efficiency for AI workloads | Real-time video analytics |
| Neuromorphic Processing Units | Ultra-low power, brain-inspired learning | Autonomous robotics, sensor networks |
| Edge-Specific AI Accelerators | Real-time, low-latency decision-making | Smart manufacturing, IoT devices |
| Multicore Edge Processors | High parallelism with minimal footprint | Edge servers, distributed control systems |
| Hybrid CPU/GPU/AI SoCs | Flexible, adaptive workload support | Industrial automation, edge gateways |

The future of edge computing hardware centers on achieving an unprecedented balance between computational power and energy consumption. Multicore processors and advanced system-on-chip (SoC) designs are becoming increasingly sophisticated, enabling more complex computations with minimal power requirements. This trend is driven by the need to support increasingly demanding AI and HPC applications across diverse environments.
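
One way to make the balance between computational power and energy consumption concrete is to compare candidate devices by throughput per watt under a fixed power budget. The sketch below is only an illustration: the device names, the TOPS figures, and the wattages are hypothetical placeholders, not measurements of any real product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EdgeDevice:
    name: str
    throughput_tops: float  # peak throughput in TOPS (illustrative value)
    power_watts: float      # typical power draw in watts (illustrative value)

    @property
    def tops_per_watt(self) -> float:
        """Efficiency metric: throughput delivered per watt consumed."""
        return self.throughput_tops / self.power_watts


def rank_by_efficiency(devices, power_budget_watts):
    """Return the devices that fit the power budget, most efficient first."""
    eligible = [d for d in devices if d.power_watts <= power_budget_watts]
    return sorted(eligible, key=lambda d: d.tops_per_watt, reverse=True)


# Hypothetical candidates; every number here is made up for the example.
CANDIDATES = [
    EdgeDevice("hypothetical-gpu-module", throughput_tops=100.0, power_watts=50.0),
    EdgeDevice("hypothetical-ai-accelerator", throughput_tops=26.0, power_watts=6.5),
    EdgeDevice("hypothetical-multicore-cpu", throughput_tops=4.0, power_watts=8.0),
]

if __name__ == "__main__":
    for device in rank_by_efficiency(CANDIDATES, power_budget_watts=10.0):
        print(f"{device.name}: {device.tops_per_watt:.2f} TOPS/W")
```

With a 10 W budget the GPU module is excluded despite having the highest raw throughput, which is the kind of trade-off a power-constrained edge site has to weigh.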

The emergence of hybrid computing architectures represents a significant breakthrough. These systems combine traditional computing elements with specialized AI and machine learning accelerators, creating more flexible and powerful edge computing solutions. Manufacturers are investing heavily in hardware-software co-optimization, ensuring that computational platforms can seamlessly adapt to the evolving demands of AI and HPC workloads.

As we approach 2025, the hardware shaping edge computing will be characterized by unprecedented levels of specialization, efficiency, and intelligence. The convergence of advanced processing technologies, energy-efficient designs, and adaptive computing architectures promises to unlock new possibilities in distributed computing, enabling more responsive, intelligent, and decentralized computational ecosystems across various industries and research domains.

The landscape of data centers and enterprise workloads is experiencing a profound transformation driven by edge computing, artificial intelligence, and high-performance computing technologies. As computational demands become increasingly complex, organizations are reimagining their infrastructure to support more distributed, intelligent, and responsive computing ecosystems.

Distributed Intelligence and Workload Optimization

Enterprise computing is shifting toward more adaptive models. According to research in Future Generation Computer Systems, enterprise workloads are increasingly being distributed across hybrid architectures that blend centralized data centers with edge computing capabilities.

| Feature | Traditional Data Center Approach | Edge-Optimized (Distributed) Approach |
|---|---|---|
| Resource Allocation | Centralized, fixed | Dynamic, location-aware |
| Latency | Higher (data travels farther) | Lower (processing closer to source) |
| Scalability | Incremental, hardware-based | Modular, software-defined |
| Security Model | Perimeter-based | Granular, context-aware |
| Flexibility | Less adaptive to workload changes | Highly adaptive and responsive |

As enterprise workloads become more decentralized, security and data governance emerge as paramount concerns. The traditional perimeter-based security models are giving way to more sophisticated, intelligence-driven approaches that can adapt to the dynamic nature of distributed computing environments.
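
To illustrate what moving from a perimeter check to a granular, context-aware one can look like, here is a minimal deny-by-default sketch. The rule fields (role, site, device attestation) and all names are assumptions chosen for the example; a production system would rely on an established policy engine rather than hand-written checks.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RequestContext:
    role: str              # who is asking
    site: str              # which edge location the request comes from
    device_attested: bool  # whether the device passed an integrity check


@dataclass(frozen=True)
class Rule:
    resource: str
    allowed_roles: frozenset
    allowed_sites: frozenset
    require_attested_device: bool = True


def is_allowed(rule: Rule, resource: str, ctx: RequestContext) -> bool:
    """Deny by default; allow only when every condition in the rule holds."""
    return (
        resource == rule.resource
        and ctx.role in rule.allowed_roles
        and ctx.site in rule.allowed_sites
        and (ctx.device_attested or not rule.require_attested_device)
    )


# Hypothetical rule: only operators at two named sites may read sensor data.
SENSOR_RULE = Rule(
    resource="sensor-stream",
    allowed_roles=frozenset({"operator"}),
    allowed_sites=frozenset({"plant-a", "plant-b"}),
)

if __name__ == "__main__":
    ctx = RequestContext(role="operator", site="plant-a", device_attested=True)
    print(is_allowed(SENSOR_RULE, "sensor-stream", ctx))
```

Unlike a perimeter model, the decision here depends on who is asking, from where, and on what device, so the same user can be allowed at one site and refused at another.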

The evolution of data centers is fundamentally about creating more intelligent, responsive, and efficient computing platforms. Enterprises are moving beyond traditional monolithic infrastructure to more agile, distributed systems that can rapidly adapt to changing computational demands. This shift is driven by the need to support increasingly complex AI and high-performance computing workloads that require unprecedented levels of computational flexibility and efficiency.

As we approach 2025, the boundaries between traditional data centers, cloud computing, and edge computing continue to blur. Organizations are developing more holistic approaches to computational infrastructure that prioritize adaptability, intelligence, and real-time responsiveness. The result is a new paradigm of enterprise computing that can support the most demanding workloads while maintaining optimal performance, security, and efficiency.

The adoption of edge computing solutions represents a complex strategic journey for organizations seeking to leverage advanced AI and high-performance computing capabilities. As enterprises navigate this transformative landscape, they encounter a multifaceted array of technological, operational, and strategic challenges that require sophisticated approaches to implementation.

Strategic infrastructure planning is critically important. According to Financial Times research, deploying AI infrastructure involves nuanced decisions across computing power, data storage, chip selection, and energy efficiency. Organizations must develop tailored strategies that align edge computing solutions with specific departmental requirements and computational needs.

The integration of edge computing solutions introduces significant technical complexities. Research from arxiv.org emphasizes the emergence of edge-cloud collaborative computing (ECCC) as a pivotal paradigm for addressing modern intelligent application requirements. This approach integrates cloud resources with edge devices to enable efficient, low-latency processing while introducing sophisticated challenges in model deployment and resource management.
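
The resource-management side of edge-cloud collaboration can be sketched as a placement decision: estimate end-to-end latency at each site and run the job where it finishes soonest. This is a deliberately simplified model, not the method from the cited research. The `Site` fields, the compute rates, and the link figures are all assumptions for illustration, and real schedulers also account for queueing, energy, and cost.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Site:
    name: str
    compute_gflops: float          # sustained compute rate (assumed)
    uplink_mbps: Optional[float]   # None means the data is already local
    rtt_ms: float = 0.0            # network round-trip time to the site


def estimated_latency_ms(site: Site, payload_mb: float, work_gflop: float) -> float:
    """Transfer time plus compute time, in milliseconds."""
    compute_ms = work_gflop / site.compute_gflops * 1000.0
    if site.uplink_mbps is None:
        return compute_ms  # no transfer needed at the data source
    transfer_ms = payload_mb * 8.0 / site.uplink_mbps * 1000.0
    return site.rtt_ms + transfer_ms + compute_ms


def choose_site(sites, payload_mb: float, work_gflop: float) -> Site:
    """Pick the site with the lowest estimated latency for this job."""
    return min(sites, key=lambda s: estimated_latency_ms(s, payload_mb, work_gflop))


# Hypothetical sites: a modest local edge box and a far larger remote cloud.
EDGE = Site("edge", compute_gflops=500.0, uplink_mbps=None)
CLOUD = Site("cloud", compute_gflops=50_000.0, uplink_mbps=100.0, rtt_ms=40.0)

if __name__ == "__main__":
    # Large payload, light compute: the transfer cost dominates.
    print(choose_site([EDGE, CLOUD], payload_mb=5.0, work_gflop=50.0).name)
    # Small payload, heavy compute: the cloud's speed outweighs the transfer.
    print(choose_site([EDGE, CLOUD], payload_mb=0.1, work_gflop=5000.0).name)
```

Under these assumed numbers the first job stays on the edge and the second goes to the cloud, which is the basic intuition behind splitting workloads between the two tiers.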

The transition to edge computing requires a holistic approach that transcends traditional technological boundaries. Organizations must develop comprehensive strategies that balance technological capabilities, operational constraints, and strategic objectives. Scientific computing research underscores the urgent need for creating scalable frameworks where high-performance computing and artificial intelligence can collaborate efficiently.

As enterprises approach 2025, successful edge computing adoption will depend on organizations' ability to navigate technological complexity, manage resource allocation strategically, and create adaptive computational ecosystems. The most effective strategies will prioritize flexibility, continuous learning, and a nuanced understanding of how edge computing can transform computational capabilities across various domains.

The future of edge computing lies not just in technological implementation but in developing intelligent, responsive infrastructures that can dynamically meet the increasingly sophisticated computational demands of modern enterprises.

Edge computing is a decentralized computing model that processes data closer to its source instead of relying on a centralized data center. This approach enhances speed, reduces latency, and improves the efficiency of data handling, particularly for AI and high-performance computing applications.

By 2025, edge computing is expected to revolutionize AI and high-performance computing by decentralizing data processing, improving responsiveness, and enabling real-time data analysis. It will lead to energy-efficient hardware designs and more secure computing environments as workloads shift closer to data generation points.

Key hardware advancements include AI-optimized GPUs, neuromorphic processing units, and edge-specific AI accelerators. These specialized architectures are designed to maximize energy efficiency while meeting the demanding computational needs of AI and HPC applications.

Organizations face several challenges when adopting edge computing, including ensuring interoperability between diverse systems, optimizing computational models for distributed architectures, and maintaining performance consistency across decentralized infrastructures.

You just read how edge computing will reshape AI and HPC, bringing decentralization, rapid data processing, and advanced hardware into the spotlight. With these exciting advancements come urgent challenges: scaling infrastructure, maintaining energy efficiency, and adapting to decentralized, distributed environments. If your organization is planning to handle next-generation AI workloads or prepare for the shift to responsive edge ecosystems, you need specialized, scalable infrastructure solutions right now.

Start optimizing your deployment with transparent access to verified, enterprise-grade GPU servers, AI-ready systems, and HPC hardware on NodeStream’s trusted marketplace. Take the lead and streamline your AI and HPC strategy by browsing our real-time inventory and rapid fulfillment options. Everything you need to prepare for the edge revolution in 2025 is just a step away. Visit NodeStream and stay ahead of tomorrow’s performance and efficiency requirements.