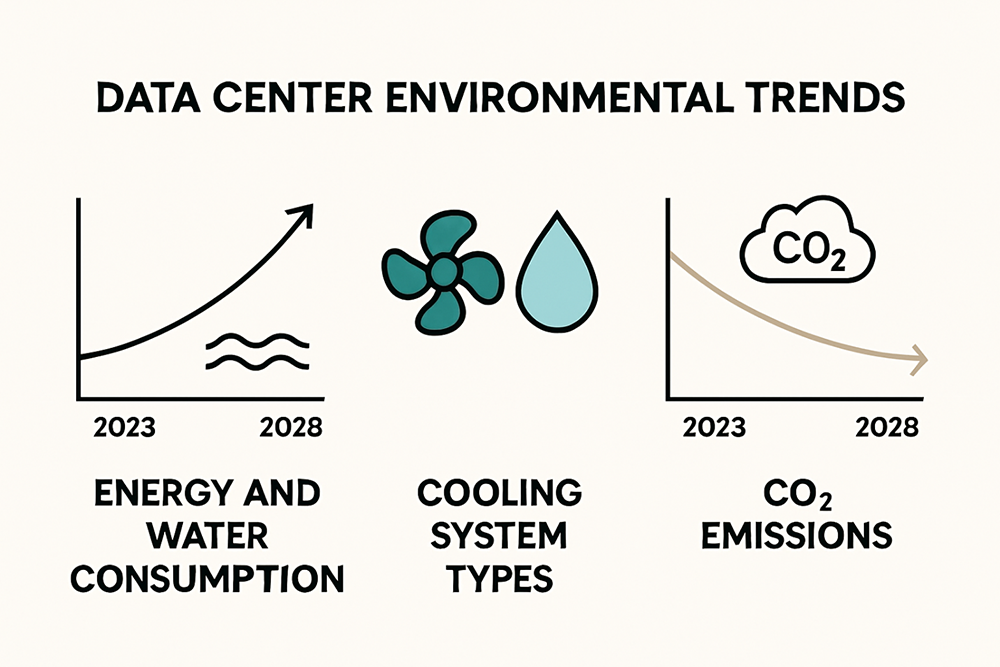

Data centers are at the heart of our digital world, powering everything from AI to streaming and banking. Yet the scale of their environmental impact is growing at a shocking pace: U.S. data centers' share of national electricity use could nearly triple, to as much as 12 percent by 2028. Most people would expect carbon emissions to be the main headline, but hidden water use and sky-high cooling demands are starting to steal the show. What if the next era of data centers is defined not by how much power they draw, but by how cleverly they use every kilowatt-hour and every drop of water?

| Takeaway | Explanation |
|---|---|
| Data centers' energy use is skyrocketing. | Their share of U.S. electricity is predicted to rise from 4.4% in 2023 to as much as 12% by 2028, driven by AI and cloud demand. |
| Cooling systems significantly impact resource use. | AI data centers may consume up to 1.7 trillion gallons of water by 2027, making efficient cooling essential. |
| Sustainable designs are essential for future data centers. | A comprehensive strategy combining renewable energy and advanced cooling can significantly reduce environmental impact. |
| New technologies are reshaping energy efficiency. | Innovations in quantum computing and biomimetic design may deliver substantial reductions in energy use. |
| Circular economy models can transform data centers. | Regenerative computing aims for systems that produce more energy than they consume and support environmental restoration. |

Data centers represent a critical infrastructure backbone for modern digital technologies, but their environmental impact has become increasingly significant. Research from the U.S. Department of Energy reveals a startling trend: data centers consumed approximately 4.4% of total U.S. electricity in 2023, and projections suggest this share could rise to between 6.7% and 12% by 2028. This rapid growth is driven primarily by the expanding requirements of artificial intelligence, cloud computing, and high-performance computing systems.
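To put the Department of Energy range in perspective, the implied compound annual growth rate $g$ of the data center share $S$ over the five years from 2023 to 2028 can be worked out from the two endpoints. This is a back-of-the-envelope sketch that assumes smooth compound growth; it describes growth in the *share* of U.S. electricity, not in absolute consumption, since total U.S. demand also changes over the period.

$$
g = \left(\frac{S_{2028}}{S_{2023}}\right)^{1/5} - 1,
\qquad
\left(\frac{6.7}{4.4}\right)^{1/5} - 1 \approx 0.088,
\qquad
\left(\frac{12}{4.4}\right)^{1/5} - 1 \approx 0.222
$$

In other words, the share would need to grow by roughly 9% to 22% per year, compounded, to land inside the projected range.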

The environmental footprint of data centers extends far beyond electricity consumption. A study published in 2024 found that U.S. data centers generate over 105 million tons of carbon dioxide equivalent (CO2e) emissions, with 56% of their energy still derived from fossil fuels. This carbon intensity represents a substantial environmental challenge that demands immediate, strategic intervention.

Beyond electricity, data centers pose significant challenges to water resources. Advanced computational hardware, such as next-generation CPUs and GPUs, creates increasingly dense thermal environments that require extensive cooling infrastructure. Deloitte's research highlights that AI-focused data centers could consume up to 1.7 trillion gallons of freshwater by 2027, placing tremendous strain on already limited water resources.

The thermal management requirements of modern high-performance computing systems have become far more demanding. Machine learning and AI workloads generate substantial heat, requiring sophisticated cooling that has traditionally relied on energy-intensive refrigeration and water-based systems. The result is a compounding environmental challenge: more computational power translates directly into more environmental stress.

Recognizing these environmental challenges, the data center industry is increasingly exploring sustainable alternatives. Strategies include transitioning to renewable energy sources, implementing more efficient cooling technologies, and designing energy-efficient hardware architectures. Some leading organizations are building in locations with natural cooling advantages, such as the Nordic countries, to reduce their reliance on mechanical cooling.

Advanced power management techniques, such as dynamic voltage and frequency scaling (DVFS), lower a processor's supply voltage and clock speed when full performance is not needed, reducing energy consumption with minimal impact on computational performance. Additionally, emerging liquid cooling technologies promise significant improvements in thermal efficiency, potentially reducing both energy consumption and water usage in data center environments.
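A rough way to see why dynamic voltage and frequency scaling (DVFS) saves energy is the textbook approximation for the dynamic (switching) power of CMOS logic, P ≈ α·C·V²·f, where α is the activity factor, C the switched capacitance, V the supply voltage, and f the clock frequency. The sketch below is illustrative only: the capacitance, workload size, and both operating points are hypothetical numbers, and it ignores static (leakage) power, which real processors also draw.

```python
"""Illustrative DVFS sketch using the CMOS dynamic-power approximation.

All numeric values are hypothetical and chosen only to show the trend;
static (leakage) power is ignored.
"""


def dynamic_power_w(c_eff_f: float, voltage_v: float, freq_hz: float,
                    activity: float = 1.0) -> float:
    """Approximate dynamic power in watts: P = activity * C * V^2 * f."""
    return activity * c_eff_f * voltage_v ** 2 * freq_hz


def energy_for_cycles_j(c_eff_f: float, voltage_v: float, freq_hz: float,
                        cycles: float, activity: float = 1.0) -> float:
    """Dynamic energy in joules to execute a fixed number of clock cycles."""
    runtime_s = cycles / freq_hz  # a lower clock stretches the runtime
    return dynamic_power_w(c_eff_f, voltage_v, freq_hz, activity) * runtime_s


C_EFF = 1e-9        # hypothetical effective switched capacitance (farads)
WORK_CYCLES = 3e9   # hypothetical fixed workload (clock cycles)

# Hypothetical operating points: (voltage in volts, frequency in Hz)
nominal = (1.0, 2.0e9)
scaled = (0.8, 1.5e9)

p_nom = dynamic_power_w(C_EFF, *nominal)
p_dvfs = dynamic_power_w(C_EFF, *scaled)
e_nom = energy_for_cycles_j(C_EFF, *nominal, WORK_CYCLES)
e_dvfs = energy_for_cycles_j(C_EFF, *scaled, WORK_CYCLES)

print(f"power:  {p_nom:.2f} W -> {p_dvfs:.2f} W ({p_dvfs / p_nom:.0%})")
print(f"energy: {e_nom:.2f} J -> {e_dvfs:.2f} J ({e_dvfs / e_nom:.0%})")
```

With these made-up numbers, power falls to about half while the energy for the same work falls to about two-thirds. Under this model the energy saving comes from the lower voltage (energy per cycle scales with V²); lowering the frequency on its own mainly stretches the runtime.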

The environmental impact of data centers represents a complex intersection of technological innovation, energy infrastructure, and ecological responsibility. As computational demands continue to grow, the industry must prioritize sustainable design principles that balance technological advancement with environmental stewardship. Learn more about energy optimization strategies that can help mitigate these environmental challenges.

To help readers compare the major environmental impacts associated with data centers, the following table summarizes key resource challenges and notable figures mentioned in the preceding sections:

| Impact Area | 2023/Projected Data | Notes/Drivers |
|---|---|---|
| Electricity Consumption | 4.4% of total U.S. electricity (2023); 6.7-12% by 2028 | Driven by AI, HPC, and cloud growth |
| Water Usage | Up to 1.7 trillion gallons by 2027 (AI-focused centers) | For cooling; strain on local freshwater resources |
| Carbon Emissions | 105 million tons CO2e (U.S., 2024 study) | 56% of energy from fossil fuels |
| Cooling Demand | Escalating complexity, high resource use | Advanced CPUs/GPUs; AI thermal loads |

The computational landscape is experiencing an unprecedented surge in energy demand, driven primarily by high-performance computing (HPC), artificial intelligence, and advanced GPU technologies. Research from RAND Corporation projects that global AI data centers could require a staggering 68 gigawatts of power by 2027, nearly double global data center power requirements in 2022. That figure approaches California's entire power capacity in 2022, highlighting how rapidly computational energy needs are growing.
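Note that 68 GW is a measure of power capacity, not annual energy. Converting it to energy requires an assumption about how heavily that capacity is used. The sketch below takes a hypothetical average utilization as a parameter; a value of 1.0 gives only a theoretical upper bound (every watt drawn around the clock, all year), and the 70% case is an arbitrary illustration, not a figure from RAND.

```python
HOURS_PER_YEAR = 8760  # 365 days * 24 hours (ignores leap years)


def annual_energy_twh(capacity_gw: float, utilization: float) -> float:
    """Annual energy (TWh) from power capacity (GW) at an average utilization."""
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0 and 1")
    # GW * hours = GWh; divide by 1000 to convert GWh to TWh
    return capacity_gw * utilization * HOURS_PER_YEAR / 1000


upper_bound = annual_energy_twh(68, 1.0)
# Hypothetical 70% average utilization, for illustration only
assumed_case = annual_energy_twh(68, 0.7)

print(f"upper bound: {upper_bound:.1f} TWh/yr")
print(f"at 70% utilization (assumed): {assumed_case:.1f} TWh/yr")
```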

Goldman Sachs Research forecasts an even steeper trajectory: a 50% increase in global data center power demand by 2027 and a potential 165% increase by 2030, both relative to 2023 levels. These projections are driven predominantly by the rapid expansion of AI and high-performance computing.
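Because both Goldman Sachs figures are stated relative to 2023, they can be turned into index values (2023 = 1.0) and annualized growth rates. The sketch below assumes smooth compound growth between the quoted years, which the forecast itself does not claim; it simply shows that the implied pace accelerates after 2027.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate between two index values."""
    return (end / start) ** (1 / years) - 1


# Index of global data center power demand, 2023 = 1.0
# (+50% by 2027 and +165% by 2030, both relative to 2023)
index = {2023: 1.00, 2027: 1.50, 2030: 2.65}

early = cagr(index[2023], index[2027], 2027 - 2023)
late = cagr(index[2027], index[2030], 2030 - 2027)
overall = cagr(index[2023], index[2030], 2030 - 2023)

print(f"2023-2027: {early:.1%} per year")
print(f"2027-2030: {late:.1%} per year")
print(f"2023-2030: {overall:.1%} per year")
```

Under that simplifying assumption, demand grows roughly 11% per year through 2027 and roughly 21% per year from 2027 to 2030.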

The financial landscape surrounding computational infrastructure reflects these massive energy demands. McKinsey & Company estimates that data centers worldwide will require $6.7 trillion in investment by 2030 to keep pace with demand for compute power. AI-equipped data centers alone are projected to account for $5.2 trillion of that capital expenditure (about 78% of the total), underscoring the significant economic investment required to support these energy-intensive technologies.

High-performance computing systems, particularly those dedicated to AI and machine learning, represent the most energy-intensive computational environments. Modern GPU architectures, designed for complex parallel processing, consume substantially more power than traditional computing infrastructure. These systems often require specialized cooling mechanisms and robust power delivery infrastructure to maintain optimal performance.

Addressing these escalating energy demands requires innovative approaches to power management and computational efficiency. Emerging technologies are focusing on developing more energy-efficient GPU architectures, advanced cooling solutions, and intelligent power management algorithms. Learn more about high-performance computing strategies that can help organizations optimize their computational infrastructure.

The intersection of advanced hardware design, sophisticated cooling technologies, and intelligent power management represents a critical frontier in managing the environmental impact of high-performance computing. As AI and computational demands continue to grow, the industry must balance performance requirements with sustainable energy consumption strategies. The future of computing will increasingly depend on our ability to develop more energy-efficient technologies that can deliver unprecedented computational power while minimizing environmental impact.

To clarify projected growth and scale, here is a table summarizing key statistics and forecasts for energy and economic demands in data centers:

| Category | Figure (Year) | Source & Notes |
|---|---|---|
| U.S. Data Center Electricity Use | 4.4% of U.S. total (2023); 6.7-12% (2028) | U.S. Dept. of Energy; driven by rapid AI/HPC growth |
| Global AI Data Center Power | 68 GW required (2027) | RAND Corp.; nearly double 2022 global data center levels, approaching California's 2022 capacity |
| Global Data Center Power Demand Growth | +50% (2027), +165% (2030), both vs. 2023 | Goldman Sachs; driven by AI expansion |
| Capital Expenditure Needed | $6.7T (total, by 2030) | McKinsey; to meet overall data center compute demand |
| AI Data Center CapEx | $5.2T (by 2030) | McKinsey; specific to AI-equipped centers |

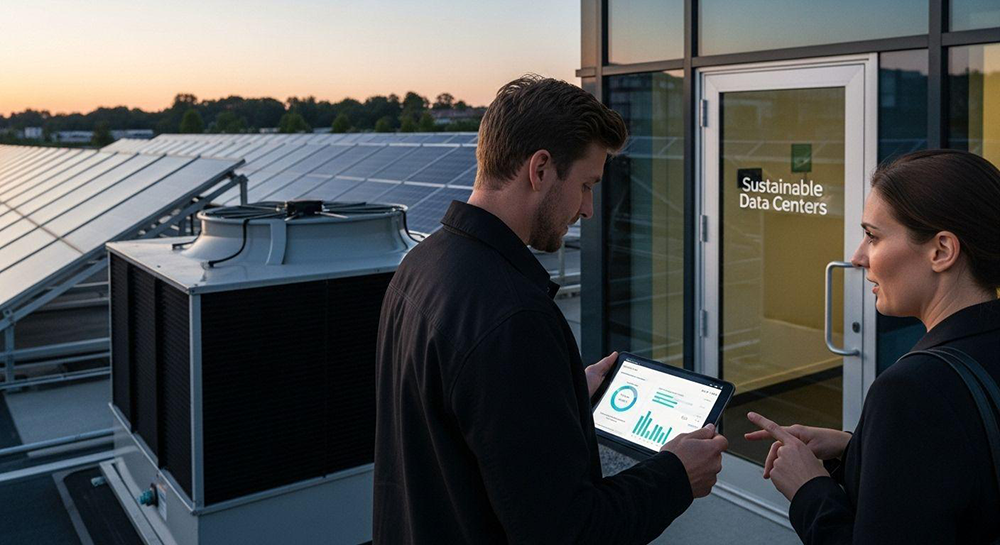

Addressing the environmental challenges of data centers requires a multifaceted approach that goes beyond traditional energy management. The International Telecommunication Union (ITU) outlines a comprehensive six-dimensional strategy for creating sustainable data center ecosystems. These dimensions include climate-resilient design, sustainable building practices, energy-efficient information and communication technologies (ICT), renewable energy integration, advanced cooling methods, and responsible e-waste management.

Organizations are increasingly recognizing that sustainability is not just an environmental imperative but also a critical business strategy. A joint World Bank and International Telecommunication Union report emphasizes the importance of greening data centers to support sustainable digital transformation, highlighting the interconnected nature of technological advancement and environmental responsibility.

One of the most promising areas of innovation in data center sustainability is cooling technology. Harvard University research highlights new approaches to thermal management, including direct-to-chip cooling. This method circulates coolant through cold plates attached directly to heat-generating components such as CPUs and GPUs, substantially reducing cooling power consumption compared to traditional air-based cooling.
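The heat a liquid loop can carry away follows the standard sensible-heat relation Q = ṁ·c_p·ΔT: heat flow equals mass flow rate times specific heat times the coolant's temperature rise. The sketch below uses it to estimate the water flow a direct-to-chip loop would need. The 100 kW rack load and 10 K temperature rise are hypothetical inputs, and it assumes plain water (c_p ≈ 4186 J/(kg·K), density ≈ 1 kg per liter) rather than a glycol mixture.

```python
WATER_CP_J_PER_KG_K = 4186.0   # approximate specific heat of liquid water
WATER_DENSITY_KG_PER_L = 1.0   # approximation; varies slightly with temperature


def required_flow_lpm(heat_load_w: float, delta_t_k: float) -> float:
    """Coolant flow (liters per minute) to absorb heat_load_w with a rise of delta_t_k.

    Rearranges Q = m_dot * c_p * dT into m_dot = Q / (c_p * dT).
    """
    if delta_t_k <= 0:
        raise ValueError("temperature rise must be positive")
    mass_flow_kg_s = heat_load_w / (WATER_CP_J_PER_KG_K * delta_t_k)
    # kg/s -> L/s via density, then seconds -> minutes
    return mass_flow_kg_s / WATER_DENSITY_KG_PER_L * 60.0


# Hypothetical rack: 100 kW of chip heat, coolant warms by 10 K across the cold plates
flow = required_flow_lpm(100_000, 10.0)
print(f"~{flow:.0f} L/min of water")
```

Because the required flow scales inversely with ΔT, letting the coolant warm by 20 K instead of 10 K would halve the flow and reduce pumping effort accordingly.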

Moreover, heat recycling strategies are emerging as a game-changing approach to energy efficiency. Some advanced data centers are now exploring ways to repurpose the waste heat generated by their computing infrastructure. By redirecting this thermal energy to heat nearby buildings or industrial processes, data centers can turn what was previously considered waste into a valuable energy resource. This approach does not shrink the data center's own electricity use, but it displaces energy that would otherwise be spent on heating, creating a more circular and sustainable computing ecosystem.

Transitioning to renewable energy sources represents a critical strategy for sustainable data centers. Leading organizations are investing in on-site solar and wind generation, power purchase agreements with renewable energy providers, and innovative energy storage solutions. The goal is to minimize reliance on fossil fuel-based electricity and create a more sustainable computational infrastructure.

Green building standards are also playing an increasingly important role. Data center designs are incorporating sustainable materials, maximizing natural cooling opportunities, and implementing advanced energy management systems. These strategies go beyond mere energy efficiency, considering the entire lifecycle environmental impact of data center infrastructure. Learn more about energy optimization strategies that can help organizations transform their computational infrastructure.

As computational demands continue to grow, the data center industry stands at a critical juncture. The strategies implemented today will determine not just the environmental impact of digital technologies, but also their long-term economic and ecological sustainability. By embracing comprehensive, innovative approaches to energy management, cooling technologies, and renewable infrastructure, data centers can become powerful agents of positive environmental change.

The future of data center sustainability hinges on breakthrough innovations that fundamentally reimagine computational infrastructure. Researchers and engineers are developing cutting-edge technologies that promise to dramatically reduce environmental impact while maintaining high-performance computing capabilities. Quantum computing and neuromorphic engineering represent two revolutionary approaches that could potentially transform energy consumption in computational systems.

Quantum computing, with its fundamentally different computational model, may offer far more energy-efficient processing for specific classes of problems. Unlike classical computers, quantum systems could, for certain calculations, reach a result using significantly less energy. Research from MIT suggests that quantum computers could reduce energy consumption by up to 90% for certain computational tasks, which would represent a transformative approach to sustainable high-performance computing.

Biomimetic design is emerging as a powerful strategy for creating more sustainable computing infrastructure. Researchers are drawing inspiration from natural systems to develop more energy-efficient cooling and computational technologies. Harvard University’s Wyss Institute has been pioneering cooling technologies that mimic biological heat dissipation mechanisms, potentially reducing data center cooling energy requirements by up to 40%.

Advanced materials like graphene and carbon nanotubes are also showing remarkable potential for creating more energy-efficient computational components. These materials offer superior thermal conductivity and electrical properties compared to traditional silicon-based technologies. Some cutting-edge research indicates that these materials could enable computational systems with dramatically reduced energy consumption and improved performance characteristics.

The concept of regenerative computing is gaining traction as a holistic approach to sustainable technological infrastructure. This model goes beyond traditional energy efficiency, focusing on creating computational systems that actively contribute to environmental restoration. Innovative approaches include designing data centers that generate more energy than they consume, integrate carbon capture technologies, and create closed-loop systems that minimize waste and maximize resource utilization.

Some forward-thinking organizations are exploring ways to transform data centers from energy consumers to net-positive environmental contributors. This involves integrating renewable energy generation, implementing advanced recycling technologies for electronic components, and developing computational infrastructures that can actively support environmental monitoring and restoration efforts. Learn more about energy optimization strategies that are shaping the future of sustainable computing.

As we approach 2025, the landscape of data center sustainability is rapidly evolving. The convergence of advanced materials, biomimetic design, quantum computing, and regenerative infrastructure models promises a future where computational technologies can simultaneously drive technological innovation and environmental stewardship. The most successful organizations will be those that view sustainability not as a constraint, but as a fundamental driver of technological advancement.

Data centers are responsible for approximately 4.4% of total U.S. electricity consumption as of 2023, and this figure is projected to rise to between 6.7% and 12% by 2028 due to increasing demands for AI and high-performance computing.

Advanced data centers, particularly those focused on AI, could consume up to 1.7 trillion gallons of freshwater by 2027 for cooling purposes. This high water usage puts significant pressure on limited freshwater resources.

Strategies for sustainable data centers include transitioning to renewable energy sources, implementing advanced cooling technologies, optimizing energy efficiency through improved hardware design, and repurposing waste heat.

Emerging technologies like quantum computing, biomimetic designs, and advanced materials hold the potential to dramatically reduce energy consumption and improve efficiency in data centers, allowing them to operate more sustainably while meeting high-performance demands.

Rising energy costs, water usage, and complex cooling requirements from AI and HPC workloads are putting pressure on every organization. If your enterprise feels the strain from growing power consumption and resource demands, it’s vital to find smarter infrastructure solutions. As highlighted in this article, optimizing your compute power without sacrificing sustainability is no longer optional. It’s the only way forward for leaders in AI, research, and data-driven industries.

Get immediate access to modern, energy-efficient GPU servers and AI-ready systems designed for performance and responsible resource use. The Nodestream Marketplace empowers you to buy, sell, or upgrade high-performance infrastructure with complete transparency and real-time inventory, so you meet demands without overspending or wasting resources.

Act now. Streamline your next hardware investment and help reduce your center’s environmental impact by sourcing smarter, more efficient solutions at Nodestream. Learn more about securing the right system for your sustainability goals and evolving workloads today.